Saturday, 30. December 2023 Week 52

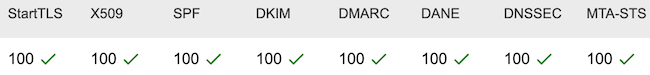

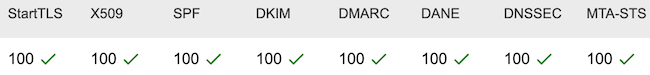

A comment on Hacker News pointed me to the MECSA tool provided by the European Union.

MECSA stands for My Email Communications Security Assessment, and is a tool to assess the security of email communication between providers.

As I run my own email server, I was curious to find out how my setup scores. Here are the results; it seems like I'm doing a good job :-)

Link to the full report for jaggi.info: https://mecsa.jrc.ec.europa.eu/en/finderRequest/f856486ecaf94dce5e8022c0a97c63b3

Wednesday, 27. December 2023 Week 52

Recently, named (the BIND daemon) on my Debian server started to emit the following messages:

Dec 23 18:30:05 server named[1168203]: checkhints: view external_network: b.root-servers.net/A (170.247.170.2) missing from hints

Dec 23 18:30:05 server named[1168203]: checkhints: view external_network: b.root-servers.net/A (199.9.14.201) extra record in hints

Dec 23 18:30:05 server named[1168203]: checkhints: view external_network: b.root-servers.net/AAAA (2801:1b8:10::b) missing from hints

Dec 23 18:30:05 server named[1168203]: checkhints: view external_network: b.root-servers.net/AAAA (2001:500:200::b) extra record in hints

The reason for these warnings is an IP address change of the B root server.

Debian has not yet updated its dns-root-data package.

To fix the mismatching IP definitions on a Debian system, the current root zone definitions can also be updated manually from Internic:

curl https://www.internic.net/domain/named.root -s > /usr/share/dns/root.hints

curl https://www.internic.net/domain/named.root.sig -s > /usr/share/dns/root.hints.sig
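To confirm that an updated hints file really lists the new addresses, a small check can help. The following is a minimal Python sketch and not part of the original setup; the function name `hint_addresses` and the inline sample excerpt are assumptions for illustration.

```python
def hint_addresses(hints_text, name):
    """Return the set of A/AAAA addresses listed for `name` in root hints text."""
    wanted = name.lower().rstrip(".")
    found = set()
    for line in hints_text.splitlines():
        line = line.split(";", 1)[0]  # drop comments
        fields = line.split()
        # Expected layout per record: <owner> <ttl> <type> <address>
        if len(fields) != 4:
            continue
        owner, _ttl, rtype, address = fields
        if owner.lower().rstrip(".") == wanted and rtype.upper() in ("A", "AAAA"):
            found.add(address.lower())
    return found


# Hypothetical excerpt in the named.root layout, used only for this example.
SAMPLE = """\
; sample excerpt
.                        3600000      NS    B.ROOT-SERVERS.NET.
B.ROOT-SERVERS.NET.      3600000      A     170.247.170.2
B.ROOT-SERVERS.NET.      3600000      AAAA  2801:1b8:10::b
"""

print(sorted(hint_addresses(SAMPLE, "b.root-servers.net")))
```

On the server, the text passed in would be the contents of /usr/share/dns/root.hints, and the result should contain the addresses that named reported as missing.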

Sunday, 10. December 2023 Week 49

It seems that after donating to Wikipedia there is a redirect to this page, which sets a cookie to no longer show the donation banners.

Sunday, 30. April 2023 Week 17

Resonating article from Mike Grindle about personal blogging and how it fits into today's Internet: Why Personal Blogging Still Rules

Before the social media craze or publishing platforms, and long before ‘content creator’ was a job title, blogs served as one of the primary forms of online expression and communication.

Everything on your blog was made to look and feel the way you wanted. If it didn’t, you rolled your sleeves up and coded that stuff in like the webmaster you were. And if the masses didn’t like it, who cared? They had no obligations to you, and you had none to them.

Hiding beneath the drivel that is Google’s search results, and all the trackers, cookies, ads and curated feeds that come with them, personal blogs and sites of all shapes and sizes are still there. They’re thriving even in a kind of interconnected web beneath the web.

The blogs on this small or “indie” web come in many shapes and sizes. […] But at their core, they all have one characteristic in common: they’re there because their owners wanted to carve out their space on the internet.

Your blog doesn’t have to be big and fancy. It doesn’t have to outrank everyone on Google, make money or “convert leads” to be important. It can be something that exists for its own sake, as your place to express yourself in whatever manner you please.

(via)

Sunday, 23. April 2023 Week 16

To automate some of the deployment steps on my personal server, I needed a tool which can be triggered by a webhook and executes some pre-defined commands.

A classic solution for this would be to have a simple PHP script with a call to system(...). But I don't have PHP installed on the server itself and wanted this to be more lightweight than a full Apache+PHP installation.

Thus exec-hookd was born. It is a small Go daemon which listens for HTTP POST requests and runs pre-defined commands when a matching path is requested.

Its configuration lives in a small JSON file, which lists the port to listen on and the paths together with their commands to execute:

    {
      "Port": 8059,
      "HookList": [
        {
          "Path": "/myhook",
          "Exec": [
            {
              "Cmd": "/usr/bin/somecmd",
              "Args": [
                "--some",
                "arguments"
              ],
              "Timeout": "5s"
            }
          ]
        }
      ]
    }

Each command runs with a timeout and is stopped once it expires, so that nothing hangs around forever.
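exec-hookd itself is written in Go; the following is only a Python sketch of the timeout behaviour described above, not the daemon's actual code. The helper names `parse_timeout` and `run_entry` are hypothetical, and the duration parser assumes a single number plus unit such as "5s".

```python
import re
import subprocess
import sys

_UNITS = {"ms": 0.001, "s": 1.0, "m": 60.0, "h": 3600.0}


def parse_timeout(text):
    """Convert a single-unit duration such as "5s" or "500ms" to seconds."""
    match = re.fullmatch(r"(\d+(?:\.\d+)?)(ms|s|m|h)", text)
    if match is None:
        raise ValueError(f"unsupported duration: {text!r}")
    return float(match.group(1)) * _UNITS[match.group(2)]


def run_entry(entry):
    """Run one "Exec" entry; return its exit code, or None if it timed out."""
    argv = [entry["Cmd"], *entry.get("Args", [])]
    try:
        # subprocess.run kills the child process when the timeout expires.
        result = subprocess.run(argv, timeout=parse_timeout(entry["Timeout"]))
    except subprocess.TimeoutExpired:
        return None
    return result.returncode


# Example entry in the shape of the config above; the command is a stand-in.
quick = {"Cmd": sys.executable, "Args": ["-c", "pass"], "Timeout": "30s"}
print(run_entry(quick))
```

Returning `None` on timeout keeps the "stopped because it took too long" case distinct from a command that ran and exited with a non-zero code.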

Sunday, 16. April 2023 Week 15

Ralf tooted a nice and tidy git log output alias for the console:

alias glg="git log --graph --pretty=format:'%Cred%h%Creset -%C(yellow)%d%Creset %s %Cgreen(%cr) %C(bold blue)<%an>%Creset' --abbrev-commit"

Saturday, 18. March 2023 Week 11

Found this inspiring blog post about how to use your own domain for Docker images. (via HN)

It explains how to use your own domain with redirects such that the Docker registry hosting the images can be changed easily. Your domain is only used for issuing HTTP redirects, so that the actual data storage and transfer happens directly with the Docker registry.

The blog post comes with a sample implementation for Caddy. As my server is running nginx, I used the following config snippet to achieve the same result:

    server {
      listen 443 ssl;
      listen [::]:443 ssl;

      server_name docker.x-way.org;

      access_log /var/log/nginx/docker.x-way.org.access.log;
      error_log /var/log/nginx/docker.x-way.org.error.log;

      ssl_certificate /etc/letsencrypt/live/docker.x-way.org/fullchain.pem;
      ssl_certificate_key /etc/letsencrypt/live/docker.x-way.org/privkey.pem;

      location / {
        return 403;
      }

      location = /v2 {
        add_header Cache-Control 'max-age=300, must-revalidate';
        return 307 https://registry.hub.docker.com$request_uri;
      }

      location = /v2/ {
        add_header Cache-Control 'max-age=300, must-revalidate';
        return 307 https://registry.hub.docker.com$request_uri;
      }

      location = /v2/xway {
        add_header Cache-Control 'max-age=300, must-revalidate';
        return 307 https://registry.hub.docker.com$request_uri;
      }

      location /v2/xway/ {
        add_header Cache-Control 'max-age=300, must-revalidate';
        return 307 https://registry.hub.docker.com$request_uri;
      }
    }

Quickly tested it with some docker pull commands and already integrated it into the build process of dnsupd.
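To make the effect of the location blocks easier to see, here is a rough Python model of the routing decision in the config above. It is a sketch only: it ignores nginx's URI normalization, the function name `route` is made up, and the image path used in the example is a hypothetical one.

```python
REGISTRY = "https://registry.hub.docker.com"
EXACT = {"/v2", "/v2/", "/v2/xway"}  # the "location =" blocks
PREFIX = "/v2/xway/"                 # the prefix location


def route(request_uri):
    """Return (status, redirect target) for a request URI."""
    path = request_uri.split("?", 1)[0]  # locations match on the path only
    if path in EXACT or path.startswith(PREFIX):
        # $request_uri keeps the original path and query string
        return 307, REGISTRY + request_uri
    return 403, None


print(route("/v2/xway/dnsupd/manifests/latest"))
print(route("/v2/someoneelse/image/manifests/latest"))
```

Only requests for the API root and for images in the xway namespace are redirected; everything else falls through to the catch-all location and gets a 403.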

Thursday, 26. January 2023 Week 4

Here's a somewhat older mashup. Happy Australia Day!

Wednesday, 18. January 2023 Week 3

Let's Encrypt recently introduced support for ACME-CAA.

I've now extended my existing CAA DNS entries with the ACME-CAA properties:

% dig +short -t CAA x-way.org

0 issue "letsencrypt.org; accounturi=https://acme-v02.api.letsencrypt.org/acme/acct/68891730; validationmethods=http-01"

0 issue "letsencrypt.org; accounturi=https://acme-v02.api.letsencrypt.org/acme/acct/605777876; validationmethods=http-01"

The effect of this is that Let's Encrypt will only grant a signed TLS certificate if the request comes from one of my two accounts (authenticated with the corresponding private key).

If the certificate request comes from a different account, no TLS certificate will be granted.

This protects against man-in-the-middle attacks, specifically those where someone sitting between Let's Encrypt and my server tries to impersonate my server in order to obtain a signed TLS certificate.

Addendum:

In case you're wondering where to get the accounturi value from, it can be found in your account file:

% cat /etc/letsencrypt/accounts/acme-v02.api.letsencrypt.org/directory/*/regr.json

{"body": {}, "uri": "https://acme-v02.api.letsencrypt.org/acme/acct/605777876"}
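The check that the CAA records above enable can be illustrated with a short Python sketch. This is not Let's Encrypt's implementation, only a simplified model of how the `accounturi` and `validationmethods` parameters restrict issuance; the function names are hypothetical.

```python
def parse_caa_issue(value):
    """Split a CAA issue value into (issuer, parameters)."""
    issuer, *params = [part.strip() for part in value.split(";")]
    parsed = {}
    for param in params:
        if not param:
            continue
        key, _, val = param.partition("=")
        parsed[key.strip()] = val.strip()
    return issuer, parsed


def issuance_allowed(issue_values, issuer, account_uri, method):
    """True if any record permits this issuer, account and validation method."""
    for value in issue_values:
        rec_issuer, params = parse_caa_issue(value)
        if rec_issuer != issuer:
            continue
        if "accounturi" in params and params["accounturi"] != account_uri:
            continue
        methods = params.get("validationmethods")
        if methods is not None and method not in methods.split(","):
            continue
        return True
    return False


ACCT = "https://acme-v02.api.letsencrypt.org/acme/acct/"
RECORDS = [
    "letsencrypt.org; accounturi=" + ACCT + "68891730; validationmethods=http-01",
    "letsencrypt.org; accounturi=" + ACCT + "605777876; validationmethods=http-01",
]

print(issuance_allowed(RECORDS, "letsencrypt.org", ACCT + "605777876", "http-01"))
# The account number 1 below is a made-up foreign account.
print(issuance_allowed(RECORDS, "letsencrypt.org", ACCT + "1", "http-01"))
```

A request from an account that is not listed, or one using a validation method other than http-01, matches none of the records and is refused.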

Tuesday, 10. January 2023 Week 2

Added a JSON Feed to this blog (in addition to the existing RSS and Atom feeds): https://blog.x-way.org/feed.json

To build the proper JSON file, I used this Jekyll template and the JSON Feed validator.

Tuesday, 3. January 2023 Week 1

For a temporary log analysis task, I wanted to get the last 24h of logs from a Postfix logfile.

To achieve this I came up with the following AWK one-liner (which fails in spectacular ways around New Year, because it assumes that every log line is from the current year):

awk -F '[ :]+' 'BEGIN{m=split("Jan|Feb|Mar|Apr|May|Jun|Jul|Aug|Sep|Oct|Nov|Dec",d,"|"); for(o=1;o<=m;o++){months[d[o]]=sprintf("%02d",o)}} mktime(strftime("%Y")" "months[$1]" "sprintf("%02d",$2+1)" "$3" "$4" "$5) > systime()'

This is then used in a cronjob to get a pflogsumm summary of the last 24h:

cat /var/log/mail.log | awk -F '[ :]+' 'BEGIN{m=split("Jan|Feb|Mar|Apr|May|Jun|Jul|Aug|Sep|Oct|Nov|Dec",d,"|"); for(o=1;o<=m;o++){months[d[o]]=sprintf("%02d",o)}} mktime(strftime("%Y")" "months[$1]" "sprintf("%02d",$2+1)" "$3" "$4" "$5) > systime()' | pflogsumm
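For comparison, here is a Python sketch of the same 24-hour filter that also copes with the year rollover. It is an alternative illustration, not what runs in the cronjob; the function names and the sample log lines are made up, and month names are assumed to be in English as in the syslog format.

```python
from datetime import datetime, timedelta


def parse_syslog_time(line, now):
    """Parse a 'Mon DD HH:MM:SS' prefix, picking the year relative to `now`."""
    stamp = " ".join(line.split()[:3])
    for year in (now.year, now.year - 1):
        try:
            parsed = datetime.strptime(f"{year} {stamp}", "%Y %b %d %H:%M:%S")
        except ValueError:  # not a timestamp, or e.g. Feb 29 in a non-leap year
            continue
        # A timestamp well in the future must belong to the previous year.
        if parsed <= now + timedelta(days=1):
            return parsed
    return None


def last_24h(lines, now):
    """Yield the lines whose timestamp lies within 24 hours before `now`."""
    cutoff = now - timedelta(hours=24)
    for line in lines:
        when = parse_syslog_time(line, now)
        if when is not None and when > cutoff:
            yield line


# Hypothetical log lines around New Year.
LOG = [
    "Dec 30 12:00:00 server postfix/smtpd[1]: connect",
    "Dec 31 23:45:00 server postfix/smtpd[2]: connect",
    "Jan  1 00:10:00 server postfix/smtpd[3]: connect",
]
for line in last_24h(LOG, datetime(2024, 1, 1, 0, 30, 0)):
    print(line)
```

Passing `now` explicitly keeps the filter testable; in a real run it would be `datetime.now()` and the lines would come from /var/log/mail.log.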