Open Source on Mars

Received a badge from GitHub's Open Source on Mars initiative :-)

From the article on Security Boulevard.

This website now also serves a security.txt file which is a standardized way of making security contact information available. (Wikipedia)

The file is available in two locations: /security.txt (the classic location) and /.well-known/security.txt (the standard location following RFC 8615).

To easily add the file on all my domains, I'm using the following nginx config snippet.

location /security.txt {
    add_header Content-Type 'text/plain';
    add_header Cache-Control 'no-cache, no-store, must-revalidate';
    add_header Pragma 'no-cache';
    add_header Expires '0';
    add_header Vary '*';
    return 200 "Contact: mailto:andreas+security.txt@jaggi.info\nExpires: Tue, 19 Jan 2038 03:14:07 +0000\nEncryption: http://andreas-jaggi.ch/A3A54203.asc\n";
}

location /.well-known/security.txt {
    add_header Content-Type 'text/plain';
    add_header Cache-Control 'no-cache, no-store, must-revalidate';
    add_header Pragma 'no-cache';
    add_header Expires '0';
    add_header Vary '*';
    return 200 "Contact: mailto:andreas+security.txt@jaggi.info\nExpires: Tue, 19 Jan 2038 03:14:07 +0000\nEncryption: http://andreas-jaggi.ch/A3A54203.asc\n";
}

This snippet is stored in a dedicated file (/etc/nginx/conf_includes/securitytxt) and is included in the various server config blocks like this:

server {
    server_name example.com;

    include /etc/nginx/conf_includes/securitytxt;

    location / {
        # rest of website
    }
}
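To sanity-check the body returned by the security.txt snippet, a small parser can split it into fields and confirm that the Expires value is a readable date. This is only a sketch: the function name parse_security_txt is made up for illustration, and the date is parsed in the e-mail style format used in the snippet rather than against any particular version of the specification.

```python
from email.utils import parsedate_to_datetime


def parse_security_txt(body):
    """Split a security.txt body into {lowercased field name: [values]}."""
    fields = {}
    for line in body.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        name, sep, value = line.partition(":")
        if not sep:
            continue  # not a "Name: value" line
        fields.setdefault(name.strip().lower(), []).append(value.strip())
    return fields


# Body copied from the nginx return directive in this post.
BODY = (
    "Contact: mailto:andreas+security.txt@jaggi.info\n"
    "Expires: Tue, 19 Jan 2038 03:14:07 +0000\n"
    "Encryption: http://andreas-jaggi.ch/A3A54203.asc\n"
)

fields = parse_security_txt(BODY)
expires = parsedate_to_datetime(fields["expires"][0])
print(fields["contact"][0], expires.isoformat())
```

Only the first colon on each line is treated as the separator, so the mailto: and http: values stay intact.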

The default configuration of snmpd on Debian has debug-level logging enabled, so we end up with a constant flood of messages like this in /var/log/syslog:

snmpd[19784]: error on subcontainer 'ia_addr' insert (-1)

The fix is to lower the logging level, which can be accomplished like this on systems with systemd:

cp /lib/systemd/system/snmpd.service /etc/systemd/system/snmpd.service
sed -i 's/Lsd/LS6d/' /etc/systemd/system/snmpd.service
systemctl daemon-reload
systemctl restart snmpd

On systems without systemd, the logging level is set by the init script (unless explicitly configured in /etc/default/snmpd), and can be changed like this:

sed -i 's/Lsd/LS6d/g' /etc/default/snmpd
sed -i 's/Lsd/LS6d/g' /etc/init.d/snmpd
service snmpd restart
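Before letting sed edit files in place, it can be reassuring to preview which lines the Lsd to LS6d substitution would touch. The following is a rough sketch of such a dry run; the function name is hypothetical and the sample text is an illustrative stand-in, not a copy of Debian's actual unit file.

```python
import re


def preview_loglevel_change(text):
    """Return (before, after) pairs for lines that s/Lsd/LS6d/ would change."""
    changes = []
    for line in text.splitlines():
        # count=1 mirrors sed without the g flag: first match per line only
        updated = re.sub(r"Lsd", "LS6d", line, count=1)
        if updated != line:
            changes.append((line, updated))
    return changes


# Illustrative input only (assumption), not the real snmpd.service content.
SAMPLE = "[Service]\nExecStart=/usr/sbin/snmpd -Lsd -f\n"

for before, after in preview_loglevel_change(SAMPLE):
    print(before, "->", after)
```

To use it on a real system, read the file's content into a string and pass it to the function instead of SAMPLE.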

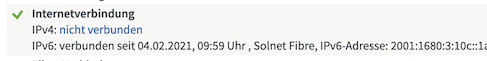

This was the initial state of my new SolNet fibre connection:

As I am a proponent of IPv6, this made me very happy, but unfortunately about 20% of the websites I visit daily only offer legacy Internet (which I later got working as well).

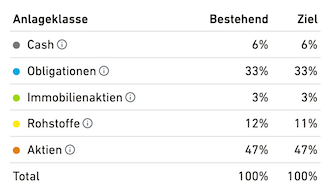

TrueWealth isn't all that good at calculating percentages:

Sunrise does not like it when people run their own router on a fiber line. While they do not directly forbid it, they don't provide any of the required configuration parameters, which makes it quite hard to use your own router.

Below you'll find the required configuration parameters to make your own router connect with IPv4 and IPv6 on a Sunrise fiber line.

Beware though that the VLAN configuration in particular might differ depending on your city. The following worked for me in Zürich.

Also, please note that I do not recommend Sunrise as Internet provider (as a matter of fact I'm on the way out of their contract and switching to SolNet).

Besides not supporting customers who bring their own router, they also like to make up additional early-termination fees (the contract states an early-termination fee of 100 CHF, but once you call them to initiate the process they tell you that it's going to cost 300 CHF as they decided to change their pricing structure unilaterally).

Enough of the ranting, now to the interesting part :-)

The Sunrise line has multiple VLANs to differentiate between Internet, Phone and TV services.

To receive an IPv4 address it requires a special value for the Client Identifier DHCP option.

For IPv6, 6rd is employed, for which we need to know the prefix and the gateway address.

The following configuration was tested with a MikroTik CRS125 router starting from the default settings.

For simplicity I've named the network interfaces according to their intended usage (e.g. LAN, sunrise and 6rd).

The first step is to configure the VLAN on top of your fiber interface. In my case it was VLAN ID 131; others were also successful with VLAN ID 10.

/interface vlan add interface=sfp1-gateway name=sunrise vlan-id=131

Next let's put in place some basic firewall rules to make sure we're not exposing our LAN to the Internet once the connection comes up.

/ip firewall filter
add action=accept chain=forward connection-state=established,related
add action=accept chain=forward in-interface=LAN out-interface=sunrise
add action=drop chain=forward
add action=accept chain=input connection-state=established,related
add action=accept chain=input protocol=icmp
add action=drop chain=input in-interface=!LAN

/ip firewall nat add action=masquerade chain=srcnat out-interface=sunrise

Now we can configure the special value for the Client Identifier DHCP option and configure the DHCP client on the VLAN interface.

/ip dhcp-client option add code=61 name=clientid-sunrise value="'dslforum.org,Fast5360-sunrise'"

/ip dhcp-client add dhcp-options=clientid-sunrise disabled=no interface=sunrise
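For readers curious what the router actually puts on the wire, DHCP options are carried as a one-byte code, a one-byte length and the value bytes. The sketch below shows that generic encoding for the Client Identifier string used above. It assumes the string is sent as plain ASCII without any extra type byte, which I have not verified against a packet capture, and the function name is made up for illustration.

```python
def encode_dhcp_option(code, value):
    """Encode a single DHCP option as code, length, value bytes."""
    if not 0 < code < 255:
        raise ValueError("option code must be between 1 and 254")
    if len(value) > 255:
        raise ValueError("option value too long for a single option")
    return bytes([code, len(value)]) + value


# Option 61 (Client Identifier) with the value configured on the router.
CLIENT_ID = b"dslforum.org,Fast5360-sunrise"
encoded = encode_dhcp_option(61, CLIENT_ID)
print(encoded.hex())
```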

This should now give us IPv4 Internet connectivity. We can test this by checking that we received an IPv4 address, that we have an IPv4 default route and that we can ping a host on the Internet.

/ip dhcp-client print
Flags: X - disabled, I - invalid, D - dynamic
 #   INTERFACE   USE-PEER-DNS   ADD-DEFAULT-ROUTE   STATUS   ADDRESS
 0   sunrise     yes            yes                 bound    198.51.100.123/25

/ip route check 1.1

status: ok

interface: sunrise

nexthop: 198.51.100.1

/ping count=1 1.1

SEQ HOST SIZE TTL TIME STATUS

0 1.0.0.1 56 59 1ms

sent=1 received=1 packet-loss=0% min-rtt=1ms avg-rtt=1ms max-rtt=1ms

Sunrise doesn't offer native IPv6 connectivity but employs 6rd (which defines how to create a 6to4 tunnel based on the public IPv4 address, an IPv6 prefix and the tunnel gateway).

Before we set up the 6rd tunnel, it's important to put in place firewall rules for IPv6, as afterwards all devices on the local network will receive a public IPv6 address.

/ipv6 firewall filter
add action=accept chain=forward connection-state=established,related
add action=accept chain=forward in-interface=LAN
add action=drop chain=forward
add action=accept chain=input connection-state=established,related
add action=accept chain=input protocol=icmpv6
add action=drop chain=input

To set up the 6rd tunnel, I've modified an existing script with the specific parameters for Sunrise (namely the 2001:1710::/28 prefix and the 212.161.209.1 tunnel gateway address).

The script creates the tunnel interface, configures an IPv6 address on the external interface, configures an IPv6 address on the internal interface (which also enables SLAAC to provide IPv6 addresses to the clients on the local network) and configures an IPv6 default route over the 6rd tunnel.
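The address arithmetic behind 6rd is easier to follow outside of RouterOS string slicing: the 32 bits of the public IPv4 address are appended to the 28-bit provider prefix, which yields a /60 from which the /64 networks are taken. Here is a minimal sketch of that calculation, assuming the whole IPv4 address is embedded (as the Sunrise parameters suggest); the function name is hypothetical.

```python
import ipaddress


def sixrd_delegated_prefix(ipv4, sixrd_prefix="2001:1710::/28"):
    """Append the 32 IPv4 bits to the 6rd prefix and return the result."""
    prefix = ipaddress.IPv6Network(sixrd_prefix)
    v4 = int(ipaddress.IPv4Address(ipv4))
    delegated_len = prefix.prefixlen + 32  # 28 + 32 = 60 for Sunrise
    # Place the IPv4 bits directly after the provider prefix bits
    base = int(prefix.network_address) | (v4 << (128 - delegated_len))
    return ipaddress.IPv6Network((base, delegated_len))


delegated = sixrd_delegated_prefix("198.51.100.123")
subnets = list(delegated.subnets(new_prefix=64))
print(delegated)   # the /60 derived from the example address
print(subnets[0])  # first /64, used on the tunnel interface
print(subnets[1])  # second /64, used on the LAN
```

For the example address 198.51.100.123 this gives 2001:171c:6336:47b0::/60, which matches the tunnel address shown in the test output further down.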

The script itself will be run via the scheduler, thus let's save it under the name 6rd-script.

:global ipv6localinterface "LAN"

:global uplinkinterface "sunrise"

:global IPv4addr [/ip address get [find interface=$uplinkinterface] address];

:global IPv4addr [:pick $IPv4addr 0 [:find $IPv4addr "/"]]

:global IPv4addr2 [:pick $IPv4addr 0 30]

:global IPv6temp [:toip6 ("1::" . $IPv4addr2)]

:global IPv4hex1 [:pick $IPv6temp 3 4]

:global IPv4hex2 [:pick $IPv6temp 4 7]

:global IPv4hex3 [:pick $IPv6temp 8 9]

:global IPv4hex4 [:pick $IPv6temp 9 12]

:global IPv6addr [("2001:171" . $IPv4hex1 . ":". $IPv4hex2 .$IPv4hex3 . ":" . $IPv4hex4 . "0::1/64")]

:global IPv6addrLoc [("2001:171" . $IPv4hex1 . ":". $IPv4hex2 . $IPv4hex3 . ":" . $IPv4hex4 . "1::1/64")]

#6to4 interface

:global 6to4id [/interface 6to4 find where name="6rd"]

:if ($6to4id!="") do={
    :global 6to4addr [/interface 6to4 get $6to4id local-address]
    :if ($6to4addr != $IPv4addr) do={
        :log warning "Updating local-address for 6to4 tunnel '6rd' from '$6to4addr' to '$IPv4addr'."
        /interface 6to4 set [find name="6rd"] local-address=$IPv4addr
    }
} else={
    :log warning "Creating 6to4 interface '6rd'."
    /interface 6to4 add !keepalive local-address=$IPv4addr mtu=1480 name="6rd" remote-address=212.161.209.1
}

#ipv6 for uplink

:global IPv6addrnumber [/ipv6 address find where comment="6rd" and interface="6rd"]

:if ($IPv6addrnumber!="") do={
    :global oldip ([/ipv6 address get $IPv6addrnumber address])
    :if ($oldip != $IPv6addr) do={
        :log warning "Updating 6rd IPv6 from '$oldip' to '$IPv6addr'."
        /ipv6 address set number=$IPv6addrnumber address=$IPv6addr disabled=no
    }
} else={
    :log warning "Setting up 6rd IPv6 '$IPv6addr' to '6rd'."
    /ipv6 address add address=$IPv6addr interface="6rd" comment="6rd" advertise=no
}

#ipv6 for local

:global IPv6addrnumberLocal [/ipv6 address find where comment=("6rd_local") and interface=$ipv6localinterface]

:if ($IPv6addrnumberLocal!="") do={
    :global oldip ([/ipv6 address get $IPv6addrnumberLocal address])
    :if ($oldip != $IPv6addrLoc) do={
        :log warning "Updating 6rd LOCAL IPv6 from '$oldip' to '$IPv6addrLoc'."
        /ipv6 address set number=$IPv6addrnumberLocal address=$IPv6addrLoc disabled=no
    }
} else={
    :log warning "Setting up 6rd LOCAL IPv6 '$IPv6addrLoc' on '$ipv6localinterface'."
    /ipv6 address add address=$IPv6addrLoc interface=$ipv6localinterface comment="6rd_local" advertise=yes
}

#ipv6 route

:global routa [/ipv6 route find where dst-address="2000::/3" and gateway="6rd"]

:if ($routa="") do={
    :log warning "Setting IPv6 route '2000::/3' via '6rd'."
    /ipv6 route add distance=1 dst-address="2000::/3" gateway="6rd"
}

Once we've added the script we also need to create the scheduler entry to run it periodically (as it needs to re-configure the tunnel and addresses whenever the public IPv4 address changes).

/system scheduler add interval=1m name=schedule1 on-event=6rd-script

After the first run of the script we should now have IPv6 connectivity. Let's test this again by checking that we have a public IPv6 address, that we have an IPv6 default route and that we can ping an IPv6 host on the Internet.

/ipv6 address print where interface=6rd and global

Flags: X - disabled, I - invalid, D - dynamic, G - global, L - link-local

# ADDRESS FROM-POOL INTERFACE ADVERTISE

0 G ;;; 6rd

2001:171c:6336:47b0::1/64 6rd no

/ipv6 route check 2600::

status: ok

interface: 6rd

nexthop: 2600::

/ping count=1 2600::

SEQ HOST SIZE TTL TIME STATUS

0 2600:: 56 50 118ms echo reply

sent=1 received=1 packet-loss=0% min-rtt=118ms avg-rtt=118ms max-rtt=118ms

And that's how you can configure and validate IPv4 and IPv6 connectivity with your own router on a Sunrise fiber line despite them not liking it very much ;-)

Recently I added MTA-STS support to one of my domains, and it turns out that this was easier than expected.

MTA-STS is used to tell mail senders that your server supports TLS. You publish a policy for your server that tells them to only use TLS (or rather STARTTLS) when connecting to you and not to fall back to unencrypted SMTP.

The way this works is with two components:

- _mta-sts.<your-site.com> TXT DNS entry indicating that your domain supports MTA-STS and the version number of your MTA-STS policy
- https://mta-sts.<your-site.com>/.well-known/mta-sts.txt containing your MTA-STS policy (which mx hosts it is valid for, should it be run in enforcing or testing mode, max-age etc.)

The idea is that a mail sender checks your MTA-STS policy through protected channels (DNSSEC, HTTPS) and then never sends mails to you in plaintext (similar approach as HSTS for HTTP but this time between mail servers).
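The two components described above are small enough to generate by hand, but a short sketch makes their shape concrete. The field names (v, id, version, mode, mx, max_age) follow the MTA-STS specification in RFC 8461. The function names, the host mail.example.com, the policy id and the max_age value are placeholders chosen for illustration, not my actual settings.

```python
def mta_sts_txt_record(policy_id):
    """Value of the TXT record published at _mta-sts.<your-site.com>."""
    return f"v=STSv1; id={policy_id};"


def mta_sts_policy(mx_hosts, mode="testing", max_age=86400):
    """Body of the policy file served at /.well-known/mta-sts.txt."""
    if mode not in ("enforce", "testing", "none"):
        raise ValueError(f"unknown MTA-STS mode: {mode}")
    lines = ["version: STSv1", f"mode: {mode}"]
    lines += [f"mx: {host}" for host in mx_hosts]  # one mx line per host
    lines.append(f"max_age: {max_age}")
    return "\n".join(lines) + "\n"


# Placeholder values (assumptions) for a hypothetical domain.
record = mta_sts_txt_record("20200101000000")
policy = mta_sts_policy(["mail.example.com"], mode="enforce", max_age=604800)
print(record)
print(policy, end="")
```

The id in the TXT record has to change whenever the policy file changes, as that is how senders notice they need to fetch the policy again.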

To set up the MTA-STS configuration, I followed the Enable MTA-STS in 5 Minutes with NGINX guide from Yoonsik Park.

Then to check my configuration I used this MTA-STS validator (which is an open-source project available on GitHub), the classic checktls.com //email/testTo: tool (MTA-STS checking needs to be explicitly enabled under 'More Options') and the free testing service provided by Hardenize.

15 years ago this weblog received the current o5 design (or theme as it would be called nowadays).

During this time the design has aged quite well and also survived the move of the backend from a self-written PHP blog-engine to Jekyll.

Although it still works surprisingly well and presents the content nicely every day, there are some parts where better usage of contemporary technologies would be desirable.

It has neither a mobile version nor a responsive layout, as the design was created before the now omnipresent smartphones were invented. Similarly, the font size is hardcoded and not well suited to today's retina displays. And yes, it uses the XHTML 1.0 strict standard with all its quirks and CSS tricks from 2002 (which luckily are still supported in current browsers).

Overall I'm quite happy that the o5 design has turned out to be so timeless and that I did not have to come up with a new one every other year (btw: I don't remember where the o5 name came from, likely the 5 is a reference to 2005 when it was created).

With the current Corona situation forcing me to spend more time at home again, I have the feeling that some things might change around the weblog (not quite sure what or when exactly, first I need to re-learn how websites are built in 2020 :-).

Compared to the previous post where intentional NAT traversal was discussed, here now comes an article about 'unintentional' (malicious) NAT traversal.

Samy Kamkar describes in his NAT Slipstreaming article how a combination of TCP packet segmentation and smuggling SIP requests in HTTP can be used to trick the NAT ALG of your router into opening arbitrary ports for inbound connections from the Internet to your computer.

The article analyses in detail the SIP ALG of the Linux netfilter stack in its default configuration, but similar attacks are likely possible with the ALGs of other protocols and vendors as well.

Important to note: the Linux SIP ALG module has two parameters (sip_direct_media and sip_direct_signalling), which restrict the IP address for which additional ports are opened to the one that sent the original SIP packet. By default both are set to 1, but if either of them is set to 0 in a router's configuration, the described NAT Slipstreaming attack allows inbound connections not only to your computer, but also to any other device in the local network!
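On a Linux-based router with shell access, the two parameters can be inspected through sysfs. The following is a sketch under assumptions: it presumes the module is loaded as nf_conntrack_sip and exposes its parameters under /sys/module/nf_conntrack_sip/parameters (reading them may require root), and the function names are made up for illustration.

```python
from pathlib import Path

PARAMS = ("sip_direct_media", "sip_direct_signalling")


def read_sip_alg_parameters(base="/sys/module/nf_conntrack_sip/parameters"):
    """Read the two parameters; ones that cannot be read are left out."""
    values = {}
    for name in PARAMS:
        try:
            values[name] = int((Path(base) / name).read_text().strip())
        except (OSError, ValueError):
            continue  # module not loaded, no permission, or odd content
    return values


def widened_exposure(values):
    """Names of parameters set to 0, i.e. the restriction is switched off."""
    return sorted(name for name, value in values.items() if value == 0)


current = read_sip_alg_parameters()
if not current:
    print("SIP ALG parameters not readable (module not loaded?)")
else:
    print(current, "relaxed:", widened_exposure(current))
```

An empty result only means the values could not be read on this machine; it says nothing about what a separate router in front of it is doing.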